Pervasive intelligence is shaped by artificial intelligence, silicon proliferation, and software-defined systems. Autonomous robots and vehicles, AR and VR headsets, IoT, aerospace, and defence systems will become more complex, integrated, and multi-physics. Many of these systems have optical components, such as optical sensors, displays, lasers, and cameras. The Synopsys Optical Solutions Group is a valued partner for innovative optical system design and simulation, empowering its customers with trusted solutions. The group is a leader in developing optical design and analysis tools that model all aspects of light propagation. Its comprehensive software packages enable optical engineers to specify, design, analyse, visualise, and virtually prototype manufacturable optical systems for applications across many industries.

Synopsys acquired Optical Research Associates (ORA) in 2010, marking the beginning of the Synopsys Optical Solutions Group. In 2012, Synopsys acquired RSoft Design Group to provide a full spectrum of photonic and optical design solutions. In 2014, Synopsys acquired Brandenburg GmbH, an automotive lighting design software maker. In 2020, Synopsys acquired Light Tec, a global provider of optical scattering measurements and measurement equipment. Today, the Synopsys Optical Solutions Group provides a trusted, end-to-end design portfolio across the entire spectrum of optics, with high-performance computing options (such as cloud and GPU computing) and efficient, seamless workflows that help customers achieve breakthroughs in developing optical systems from the macro to the nano scale.

The Synopsys Optical Solutions platform

At the heart of Synopsys' offerings in this area are its software packages, including CODE V imaging design software, LightTools illumination design software, and LucidShape products for automotive lighting. The RSoft Photonic Device Tools offer an extensive portfolio of simulators and optimizers for both passive and active photonic devices, such as lasers and VCSELs, and are integrated with Synopsys optical and semiconductor design tools (Synopsys TCAD) for multi-domain co-simulations. In addition, customers can purchase measurement equipment or services from Synopsys to characterise optical materials and import the resulting data into Synopsys optical software tools for high-accuracy optical product simulations and visualisations.

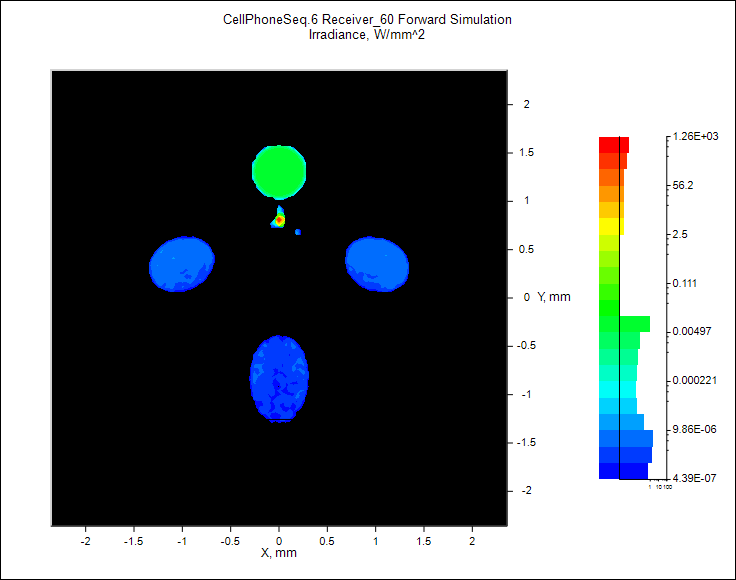

Pictured above: a cell phone pancake lens modelled in LightTools, with the diffraction distribution simulated in RSoft DiffractMOD RCWA (Credit: Synopsys)

Emilie Viasnoff, head of Optical Solutions at Synopsys, explains: "Our software portfolio provides a complete platform for designing and simulating optical systems and components. Given the growing complexity, miniaturisation, and integration of optical systems, the need for an optical engineer to manage multi-scale systems is more important than ever. Our customers are increasingly using not only one tool from our portfolio but very often two, if not three, tools. That has meant that we have had to build interfaces across our tools so that our customers can operate them together seamlessly to design new generations of cameras, AR/VR headsets, HUDs, and headlamps."

Optical complexity drives design

The trend toward complexity and miniaturisation has been driven by industries such as consumer electronics, as Viasnoff elaborates: "Think about the cameras in smartphones, laptops, and autonomous driving systems or robots. Compare those cameras with traditional digital single-lens reflex (DSLR) cameras, which are purely composed of optics. Now, you must embed all the optics into a super compact camera and make them work with the sensors and chips to ensure great image quality. Moreover, if you look at your smartphone, it's not only one camera but three or four or five, and all of these are supposed to take pictures of similar quality to a DSLR by leveraging the whole system (lens, sensor, ISP, chip) instead of just relying on a massive, aberration-free optical zoom. Miniaturisation and integration have made cameras pervasive beyond photography, extending into computer vision for autonomous systems."

The integration of cameras into these pervasively intelligent systems has spurred the demand for computational photography and computer vision, which today leverage artificial intelligence (AI) to compensate for some optical limitations. Says Viasnoff: "Smartphones, laptops, and other devices now have powerful chips, which means they can use AI. These AI models can compensate for the difference between traditional larger optics and more modern metaoptics. You can get almost the same picture quality as if you had huge optical elements. For computer vision systems, you have an AI model to extract information from the image, such as facial recognition or categorisation. AI is transforming optical systems as much as other domains. As a tool provider, we aim to evolve our software packages to enable the design and simulation of these disruptive optical systems."

Future trends: Autonomous vehicles and AR

Looking to the future, Viasnoff predicts significant growth in autonomous vehicles and augmented reality (AR), both of which rely on sophisticated optical systems. She says, "Autonomous cars now have enough computing power to process all the data, mostly from optical sensors such as lidar and cameras, that sense the environment accurately and in real time. Beyond further sensor miniaturisation, one of the challenges for autonomous cars is safety. Safety testing is currently done by having a fleet of vehicles drive millions or even billions of miles without any critical events. This is done with real cars, cameras, and hardware. AR is experiencing the same trend regarding innovation, integration, and complexity. In terms of AR systems, what has been tested so far is not always completely ergonomic. AR devices can be heavy, the image quality is not at its best, and the brightness is not always sufficient. By leveraging more and more simulation in autonomous vehicle and AR design cycles, engineers will be able to improve the safety, quality, and overall performance of these integrated, complex systems."

As the industry moves toward virtual prototyping and simulation-driven design, Synopsys provides comprehensive solutions encompassing design, testing, and simulation. The company's roadmap also focuses on high-performance computing, with the aim of running all simulations and end-to-end workflows as close to real time as possible.

The team at Synopsys is enthusiastic and motivated to bring these simulation solutions to customers in all industries, especially within emerging applications such as those highlighted here. Says Viasnoff, "What's exciting – given the growing complexities and the AI inflection point – is looking for all the bridges we can build to provide a comprehensive simulation portfolio to our customers, not only across our optical design tools but more broadly across Synopsys products. What I like the most is finding all the meaningful synergies we can bring to our customers to make their lives easier through meaningful, real-time simulations."

Emilie Viasnoff is head of Optical Solutions at Synopsys (Credit: Synopsys)