The ability to spot poisonous food visually sounds so miraculous it’s little wonder that it’s not part of our natural eyesight. Yet it is a possibility offered by hyperspectral imaging, and judging food quality is perhaps the most striking use driving this technology’s adoption.

That’s thanks to chemical information beyond the visible spectrum, explained Oliver Pust, vice president of sales and marketing at Danish firm Delta Optical Thin Film. ‘If you have nuts on the conveyor belt, and one nut has a toxin, it can spoil one tonne of produce,’ he said.

The spectroscopic signature resulting from the toxin’s chemical makeup is visible to hyperspectral imaging used for quality control in processing, Pust added. But bringing hyperspectral imaging into wider use is challenging for optical technology. ‘You measure at least in 2D with good spatial resolution,’ Pust said. ‘You also want to measure spectra at high fidelity and good resolution. Spatial spectral measurement has challenges.’

Such optical demands have slowed hyperspectral imaging’s growth, according to Boris Lange, manager of imaging Europe at Edmund Optics. The technology combines a two-dimensional image with a third, spectral dimension: each pixel records many wavelengths, which carry spectroscopic chemical information. Together, these measurements build a hyperspectral imaging data cube. The various approaches that can produce such a data cube each have their own challenges. Pushbroom systems capture the spectra of every point along a single line at the same time, and are often fixed in place while objects move past on a conveyor belt. Whiskbroom systems capture spectra as a sensor whisks from the top to the bottom of an image, before moving horizontally and returning to the top. By contrast, snapshot systems capture the entire image at once. Across these approaches, new technology is helping hyperspectral imaging grow.
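As a rough illustration of the data cube idea, the sketch below stacks pushbroom scan lines into a cube indexed by line, pixel and band. It is a minimal sketch in plain Python, not any vendor's software: the function names, the cube layout and the synthetic values are all assumptions made for the example.

```python
def assemble_pushbroom_cube(line_frames):
    """Stack scan lines into a cube indexed as cube[line][pixel][band].

    Each frame is one scan line: a list of pixels, each pixel a list of
    band values. The layout is an assumption made for this sketch.
    """
    frames = [[list(pixel) for pixel in frame] for frame in line_frames]
    if not frames or not frames[0]:
        raise ValueError("need at least one non-empty line frame")
    n_pixels = len(frames[0])
    n_bands = len(frames[0][0])
    for frame in frames:
        if len(frame) != n_pixels or any(len(px) != n_bands for px in frame):
            raise ValueError("all line frames must share one shape")
    return frames


def band_image(cube, band):
    """Return the 2D image seen at a single wavelength band."""
    return [[pixel[band] for pixel in line] for line in cube]


def make_frame(line_index, n_pixels=4, n_bands=3):
    """Synthetic scan line; each value encodes (line, pixel, band)."""
    return [[100 * line_index + 10 * p + b for b in range(n_bands)]
            for p in range(n_pixels)]


# Hypothetical sizes: 5 scan lines, 4 pixels across the belt, 3 bands.
cube = assemble_pushbroom_cube(make_frame(i) for i in range(5))
spectrum = cube[2][1]            # full spectrum of one spatial pixel
first_band = band_image(cube, 0) # spatial image at the first band
print(len(cube), len(cube[0]), len(cube[0][0]))
print(spectrum)
```

Reading along the last index gives the spectrum of one point on the belt, while fixing a band gives an ordinary 2D image at that wavelength.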

Transmission and wavelength correction are significant challenges in each of these data collection approaches, Lange explained. The optics must span very broad colour ranges, for example from 470 to 900nm or 1,100 to 1,650nm, but perform consistently at each wavelength, so that the data cube is built up evenly. This is not typically possible with a single glass lens, so an imaging lens assembly for a hyperspectral imaging camera might contain up to ten individual lenses.

‘If you want to correct the lens over a wide waveband range, the optical designer needs to use a lot of different materials, and combine them. This drives cost, because there might be more exotic glass materials.’

Each lens element must be free of imaging errors and aberrations, to guarantee signal quality. Finally, it must transmit a high proportion of the light that enters. ‘In pushbroom imaging applications objects are moving quite fast,’ Lange said. ‘You’ve got to be able to pick up a lot of light. You have to have a high transmission, high speed lens, with an f number around f/2 or f/2.8.’
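To put the f-numbers Lange mentions in perspective, the sketch below compares light gathering between two apertures. It assumes the standard approximation that image-plane irradiance scales as one over the f-number squared, with equal lens transmission; the function names are illustrative only.

```python
import math


def relative_light_gathering(f_number, reference_f_number):
    """Irradiance at f_number relative to reference_f_number.

    Assumes irradiance scales as 1 / N^2 and that both lenses have the
    same transmission, which real lenses only approximate.
    """
    if f_number <= 0 or reference_f_number <= 0:
        raise ValueError("f-numbers must be positive")
    return (reference_f_number / f_number) ** 2


def stops_between(f_number, reference_f_number):
    """Difference in photographic stops (one stop doubles the light)."""
    return math.log2(relative_light_gathering(f_number, reference_f_number))


ratio = relative_light_gathering(2.0, 2.8)
print(f"f/2 collects about {ratio:.2f}x the light of f/2.8")
print(f"that is about {stops_between(2.0, 2.8):.2f} stops")
```

Under these assumptions, moving from f/2.8 to f/2 roughly doubles the light reaching the sensor, which matters when objects pass quickly under a pushbroom camera.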

Industrial imagers

Systems that can deliver such capabilities can see and identify chemically different materials and objects, echoed Timo Hyvärinen, co-founder and sales manager at Specim Spectral Imaging in Oulu, Finland. He believes hyperspectral imaging is becoming a key vision technology in applications solving global challenges. These include recycling and waste management processes, reduction of food waste and monitoring the environment. But spectral signatures can appear in different spectral regions. Colour is obviously visible, but vegetation stress and species identification rely on the visible and near infrared, and chemical imaging on the near and longer infrared.

HySpex’s Mjolnir product offers good quality data in a small hyperspectral system

Hyvärinen said: ‘As a hyperspectral imager should optimally operate across a large spectral range, its optical design benefits and can be simplified by clever use of different optical materials and combination of refractive and reflective components. High performance across a broad spectral range is a key criterion for anti-reflection coatings, and in the case of reflective optics, for protective coatings, too.’

Optical design for different spectral regions also requires tailored optical materials and coatings, added Hyvärinen. ‘As another example, optical design for industrial and drone-based cameras is more cost critical than design for high resolution aerial mapping,’ he said.

Optical design and choice of detector array are key areas in hyperspectral imaging, Hyvärinen said. ‘There are more optical performance parameters to be optimised than in traditional red/green/blue or greyscale imaging,’ he said. ‘Optical design influences light transmission and collection efficiency, image sharpness, uniformity and aberrations that need to be maximised or optimised across the broad spectral region typically targeted in a hyperspectral imager.’

Specim produces hyperspectral pushbroom line scan cameras. ‘Those designed primarily for industrial on-line applications run at hundreds to a thousand line images per second with full or configured spectral band sets and a very high light collection efficiency of f/1.7,’ Hyvärinen said. His company matches optical design to the detector pixel size by meeting the Nyquist sampling criterion, specifying that the optical spot is twice the size of a detector pixel. This keeps internal aberrations at sub-pixel level, so they don’t need to be corrected for in data processing.
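The Nyquist matching described here comes down to simple arithmetic, sketched below. The pixel pitch is a hypothetical value chosen for illustration, not a Specim specification, and the function names are assumptions.

```python
def nyquist_frequency_lp_per_mm(pixel_pitch_um):
    """Highest spatial frequency a sensor samples without aliasing.

    One line pair needs two pixels, so the limit is 1 / (2 * pitch).
    """
    if pixel_pitch_um <= 0:
        raise ValueError("pixel pitch must be positive")
    return 1000.0 / (2.0 * pixel_pitch_um)


def matched_spot_diameter_um(pixel_pitch_um, pixels_per_spot=2.0):
    """Optical spot size that spans the given number of pixels."""
    return pixels_per_spot * pixel_pitch_um


pitch_um = 10.0  # hypothetical detector pixel pitch in microns
print(nyquist_frequency_lp_per_mm(pitch_um), "line pairs per mm")
print(matched_spot_diameter_um(pitch_um), "micron spot for two-pixel sampling")
```

A spot much smaller than two pixels would carry detail the detector cannot sample, while a much larger one would waste detector resolution.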

In this way, Specim’s FX series hyperspectral cameras were the first to meet industrial machine vision requirements for performance, compact rugged construction and cost competitiveness. The light collection efficiency is twice that of previous generation cameras, Hyvärinen claimed, enabling high-speed applications with less illumination. And manufacturing the cameras is easier, because the company has minimised the assembly and adjustment phases.

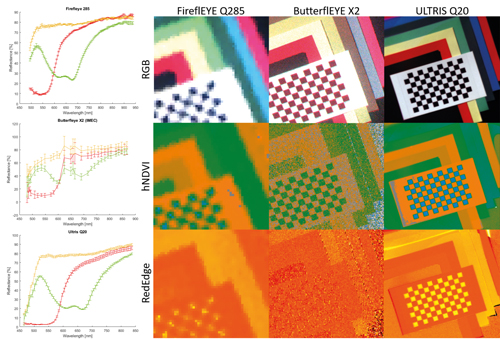

Comparison of the spectral quality of three Cubert hyperspectral video cameras. For each camera the spectra of the red, green and yellow samples are shown along with the respective noise indicating standard deviation (left). Image comparison of the three hyperspectral video cameras Firefleye Q285, Butterfleye X2 and Ultris Q20. For each camera an RGB image (true colour) and two vegetation indices (hNDVI and RedEdge) are shown (right). Credit: Cubert

And now, Specim’s Fenix cameras have both visible and near-infrared and short-wave infrared imaging spectrometers, spanning wavelengths from 400 to 1,000nm and 900 to 2,500nm, integrated behind common front optics. This single instrument collects this entire spectral data range for each image pixel simultaneously. Compared to a dual camera set-up, where each camera has its own front optics, this design eliminates the extra data processing step of overlapping two images from two different cameras, Hyvärinen explained.

Collecting snapshots

Beyond line scanning, snapshot spectral imaging builds the 3D cube of images in a single moment, which makes it much easier to follow dynamic scenes. Commonly, companies arrange wavelength filters in a grid on a detector and combine it with standard camera objective lenses. However, this often comes at the expense of spatial resolution, Edmund Optics’ Lange noted. He highlighted sensors based on this principle developed by Belgian firm Imec. The sensors restrict groups of several neighbouring pixels on silicon-based detectors to a particular wavelength. Using Fabry-Perot filters, Imec provides off-the-shelf sensors that can exceed 100 colours, spanning 600 to 1,000nm or 450 to 960nm. As Fabry-Perot filters also let other harmonics through, Imec adds another filter to avoid cross-talk with these wavelengths.
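The harmonics issue can be illustrated with the textbook model of an ideal Fabry-Perot cavity at normal incidence, whose transmission peaks fall where the round-trip optical path equals a whole number of wavelengths. The sketch below is based on that idealised model only; the cavity value, the assumed silicon response window and the function names are illustrative, not Imec's design data.

```python
def fabry_perot_peaks_nm(round_trip_path_nm, lo_nm, hi_nm, max_order=50):
    """Peak wavelengths of an ideal cavity that fall within [lo_nm, hi_nm].

    Peaks satisfy m * wavelength = round-trip optical path (2 * n * d)
    for integer order m, at normal incidence.
    """
    peaks = []
    for order in range(1, max_order + 1):
        wavelength = round_trip_path_nm / order
        if wavelength < lo_nm:
            break  # higher orders only move to shorter wavelengths
        if wavelength <= hi_nm:
            peaks.append((order, wavelength))
    return peaks


def split_by_passband(peaks, band_lo_nm, band_hi_nm):
    """Separate peaks an extra band-limiting filter keeps from those it blocks."""
    kept = [p for p in peaks if band_lo_nm <= p[1] <= band_hi_nm]
    blocked = [p for p in peaks if not band_lo_nm <= p[1] <= band_hi_nm]
    return kept, blocked


# Hypothetical cavity tuned for 900nm in first order; silicon is assumed
# to respond from roughly 400 to 1,000nm.
peaks = fabry_perot_peaks_nm(900.0, 400.0, 1000.0)
kept, blocked = split_by_passband(peaks, 600.0, 1000.0)
print("peaks seen by the detector:", peaks)
print("kept by a 600 to 1,000nm filter:", kept)
print("blocked harmonics:", blocked)
```

In this toy case the second-order peak at 450nm would leak into a pixel meant for 900nm unless a band-limiting filter removes it, which is the kind of cross-talk the additional filter addresses.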

Several smaller camera manufacturers using these Imec sensors exploit Edmund Optics’ VIS-NIR series of objective lenses. ‘They have good transmission over the entire visible and infrared range with a lot of choices, in terms of focal length,’ Lange said.

Snapshot hyperspectral images could be used when surgeons are removing tumours, said Delta Optical Thin Film’s Pust. ‘You really need to have a snapshot camera to tell you that’s where the tumour is, and that’s where healthy tissue is instantaneously,’ he said. It’s also difficult to align image series from scanning hyperspectral cameras on drones, Pust said, but it’s much easier with snapshot cameras.

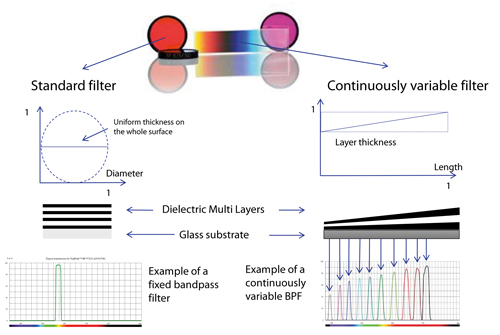

To make continuous variable filters, Delta Optical Thin Film coats materials onto fused silica substrates with gradually changing thickness. Credit: Delta Optical Thin Film

Delta Optical Thin Film’s Bifrost continuous variable bandpass filter technology is found today in snapshot hyperspectral cameras, explained Pust. To make continuous variable filters, the company coats materials onto fused silica substrates with gradually changing thickness. The thickness determines the light wavelength that can pass through each area. The approach enables very high transmission and allows more design flexibility than filters coated directly onto sensors. ‘When you deposit directly on the chip, for patterning to enable the snapshot technology, you need to remove parts of the coating again,’ explained Pust. ‘That has a limit as to the thickness that you can remove again. We don’t have that limit without that photographic lift-off process.’
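Because coating thickness sets the passband, position along a continuous variable filter maps to wavelength. The sketch below assumes a purely linear gradient and uses made-up numbers; neither the linearity nor the range and length are taken from Delta Optical Thin Film's specifications.

```python
def centre_wavelength_nm(position_mm, start_nm, stop_nm, length_mm):
    """Passband centre at a position along a linearly graded filter."""
    if not 0.0 <= position_mm <= length_mm:
        raise ValueError("position lies outside the filter")
    return start_nm + (stop_nm - start_nm) * position_mm / length_mm


def position_for_wavelength_mm(wavelength_nm, start_nm, stop_nm, length_mm):
    """Inverse mapping: where on the filter a given wavelength passes."""
    if not min(start_nm, stop_nm) <= wavelength_nm <= max(start_nm, stop_nm):
        raise ValueError("wavelength lies outside the filter range")
    return (wavelength_nm - start_nm) / (stop_nm - start_nm) * length_mm


# Hypothetical filter: 450 to 850nm spread over 20mm, or 20nm per mm.
print(centre_wavelength_nm(5.0, 450.0, 850.0, 20.0))
print(position_for_wavelength_mm(650.0, 450.0, 850.0, 20.0))
```

With such a mapping, each column of a detector placed behind the filter can be assigned the wavelength it sees.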

Pust said that the result surpasses rival filters, which don’t have a signal to background ratio better than 10:1. ‘With our filters, we can really block all the lines that you do not want, apart from that narrow bandpass you’re looking at,’ he said. ‘We can suppress all the other wavelengths by four orders of magnitude.’
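Suppression by four orders of magnitude corresponds to an optical density of 4 outside the passband. The sketch below turns that into a signal to background estimate under a deliberately crude model: all in-band light is transmitted and out-of-band light leaks through at the blocking level. The power values are hypothetical and the function names are assumptions.

```python
import math


def optical_density(transmission):
    """Optical density, defined as -log10 of the transmitted fraction."""
    if not 0.0 < transmission <= 1.0:
        raise ValueError("transmission must be in (0, 1]")
    return -math.log10(transmission)


def signal_to_background(in_band_power, out_of_band_power, blocking_orders):
    """Ratio of wanted light to leaked light in the crude model above."""
    leak = out_of_band_power * 10.0 ** (-blocking_orders)
    return in_band_power / leak


# Hypothetical scene: 100 times more light outside the passband than in it.
print(optical_density(1e-4))
print(signal_to_background(1.0, 100.0, 4))
print(signal_to_background(1.0, 100.0, 3))
```

In this toy scene, each extra order of magnitude of blocking improves the signal to background ratio tenfold.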

Sensors exploiting such filters initially needed scanning systems to build up hyperspectral data cubes. But in 2018 a further advance by Fraunhofer Institute for Applied Optics and Precision Engineering, in Jena, Germany, enabled snapshot hyperspectral imaging. ‘They combined our filter in a smart way with microlens arrays,’ said Pust. That enables high-quality snapshot hyperspectral cameras, commercialised by companies such as German firm Cubert.

Innovating past the hype

For the HySpex division of Norwegian firm Norsk Elektro Optikk, the optical design goal ‘is almost always to achieve a very high performance at the pixel level’, according to Trond Løke, chief executive officer.

The quality of the hyperspectral systems it builds, such as its Mjolnir product, always begins with a high-performance optical design. ‘Before that camera was introduced, you could either have good quality data or you could have a small hyperspectral system – and we were selling only good quality systems,’ Løke said. ‘With Mjolnir it was the first time when you could obtain scientific grade hyperspectral data with such a small camera. Making the camera so small and good would not be possible without the appropriate optical design.’

Pixel-level design is important because all spectral channels in one spatial pixel must sample data from exactly the same area, so that data is spatially co-registered. ‘If you have spatial misregistration the spectral information in an image pixel is made up by parts of the spectra from spatial positions around that pixel,’ Løke added. ‘Similarly, you want any spectral channel of your hyperspectral camera to have exactly the same central wavelength throughout the entire field of view, you want your data to be spectrally co-registered.’ As such, HySpex often needs to correct aberrations on a sub-pixel level, whereas traditional imaging system aberrations are comparable to pixel size. ‘We prefer to have a wide choice of optical glass types to fulfil these very demanding image quality requirements,’ Løke said.

Specim’s FX17 camera is used in Picvisa’s machine vision solutions for waste treatment and recycling

Spatial and spectral co-registration in a real camera are never perfect, Løke admitted. ‘But you want it to be as perfect as possible,’ he added. ‘Spatial and spectral misregistration in our cameras are low, and this, in combination with very sharp optics, is one of the key advantages when using our systems. We can detect and identify significantly smaller objects than most other cameras with seemingly similar specifications.’

Consequently, high quality hyperspectral cameras are not particularly cheap, said Løke, but they can be versatile. ‘We try to create systems that can be used in a wide variety of applications,’ he said. ‘However, there are limits to that. It is unlikely that two optical systems with a 1˚ field of view and 60˚ field of view will be very similar. Also, every now and then comes an application that has strong emphasis on a single specification, such as light throughput or weight or size. Then it makes sense to make a new design that excels at the most important specification.’

As an example, HySpex has just released a short-wave infrared (SWIR) camera that spans 640 spatial pixels. ‘In the SWIR world, this is a high pixel count,’ said Løke. ‘We are happy that we managed to keep the same per pixel image quality as in our SWIR 384 system. Of course, we are constantly working on developing new products, and I believe that in a year or two from now I will be able to mention another new HySpex system.’

Thanks to such products, hyperspectral imaging is being used ever more broadly, said Delta Optical Thin Film’s Pust. ‘It has been hyped for several years now, but it didn’t really pick up to become a really volume application,’ he said. ‘It’s now that people realise what you can do with it. They also see that there are now cameras on the market that are easier to handle for many more applications. So I see hyperspectral imaging getting broad acceptance and use in many different areas.’

Strong and stable: Industrial imaging lenses get robust upgrade

In recent years new ruggedised lenses have been designed for industrial applications that often involve harsh environments

Imaging lenses used in industrial machine vision applications often have special requirements beyond those of standard imaging lenses. In areas like factory automation, robotics and industrial inspection, lenses need to be resistant to demanding environments involving vibration, shock, temperature changes and contaminants. In recent years new ruggedised lenses from Edmund Optics have been designed to meet these challenges. There are three distinct types available: industrial ruggedisation, ingress protection, and stability ruggedisation. This article will outline the differences.

Industrial ruggedisation

Industrial ruggedised lenses are designed to survive vibration and shock without taking damage or changing focus or f/#. To achieve this, flexibility is sacrificed by eliminating moving parts. A standard fixed focal length lens uses a focus mechanism and an iris. The iris adjusts f/# using thin leaves and ball detents, which can spring out of place during shock and vibration. In industrial ruggedised lenses the iris is replaced with a fixed aperture stop, and the focusing mechanism, which typically consists of a threaded barrel within another threaded barrel, is replaced by a single thread and rigid lock mechanism.

Figure 1: A standard imaging lens typically makes use of an adjustable iris, whereas an industrial ruggedised imaging lens uses more simplified mechanics

Industrial ruggedisation is ideal for applications where an imaging system is set up once and not changed afterwards. An additional advantage of reducing the number of complex movable components in the lens design is that static parts are usually less expensive than movable parts. Because of this, a significant cost saving is achieved at volume and passed along in the form of a mechanically simpler, more robust product. There are numerous applications for industrial ruggedised lenses, including high-vibration production-line environments where the camera is quickly accelerated or decelerated, inspection systems where multiple similar camera systems are set up, and autonomous systems such as self-driving cars and warehouse sorting robots.

Ingress protection

Ingress protection ruggedisation ensures a lens assembly is sealed using O-rings and RTV silicone to prevent moisture and debris from entering the lens. This protection is typically added to an industrial ruggedised lens, as sealing an adjustable focus and iris would be problematic. These lenses are used in environments with high humidity or moisture, sputter, dust or small particles, and where there is no space to fully enclose the lens and camera.

Figure 2: An ingress protected ruggedised lens features an O-ring to seal out contaminants such as dust, dirt or moisture

Modern ingress protected lenses are rated IP67, IP68, or better. This means they are fully dust tight and can withstand direct pressure jets and full immersion at a given depth for a minimum amount of time, as outlined in the IP specifications.
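For readers unfamiliar with IP codes, the sketch below decodes the two digits. The descriptions are brief summaries of the levels defined in IEC 60529, written from memory for illustration. The standard itself remains the authority, and the function name is an assumption.

```python
import re

# First digit: protection against solid objects and dust.
SOLIDS = {
    0: "no protection",
    1: "objects of 50 mm or larger",
    2: "objects of 12.5 mm or larger",
    3: "objects of 2.5 mm or larger",
    4: "objects of 1 mm or larger",
    5: "dust protected",
    6: "dust tight",
}

# Second digit: protection against water.
LIQUIDS = {
    0: "no protection",
    1: "vertically dripping water",
    2: "dripping water with the enclosure tilted 15 degrees",
    3: "spraying water",
    4: "splashing water",
    5: "water jets",
    6: "powerful water jets",
    7: "temporary immersion (up to 1 m for 30 minutes)",
    8: "continuous immersion under conditions set by the manufacturer",
}


def decode_ip_code(code):
    """Return (solids, liquids) descriptions for a code such as 'IP67'."""
    match = re.fullmatch(r"IP([0-6])([0-8])", code.strip().upper())
    if match is None:
        raise ValueError(f"not a two-digit IP code: {code!r}")
    return SOLIDS[int(match.group(1))], LIQUIDS[int(match.group(2))]


for rating in ("IP67", "IP68"):
    solids, liquids = decode_ip_code(rating)
    print(f"{rating}: {solids}; {liquids}")
```

In the standard, the immersion digits do not automatically cover the water-jet tests, so jet resistance is usually stated as a separate rating alongside the immersion one.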

Stability ruggedisation

Like industrial ruggedisation, stability ruggedisation protects the lens from damage, but it also ensures optical pointing and positioning are maintained after shock and vibration. In addition to replacing the iris and simplifying the focus mechanism, individual lens elements are glued in place to prevent them from moving. Figure 3 shows a stability ruggedised lens in which the lens elements are glued in place and a clamping lock is used to simplify focus.

Figure 3: A stability ruggedised lens assembly is composed of optical elements that are all glued in place

Lens elements sit in the inner bore of the barrel of an imaging lens assembly. Between the outer diameter of the lens and the inner diameter of the barrel is a small space, typically 50µm or less. Though this space is very small, decentres of only tens of microns are enough to affect the pointing stability of the lens (figure 4).

Figure 4: In an unperturbed system (a) with no shock or vibration, the object crosshairs are mapped to the image crosshair. In a system that has been perturbed by shock or vibration (b), lenses within the barrel decentre, causing a change in optical pointing stability and resulting in the object crosshairs becoming mapped to a different image location

For a stability ruggedised lens, if an object point is in the centre of the field of view and falls on the exact centre pixel, it will always fall on this same pixel even when the lens has been heavily shocked or vibrated (figure 5).

Figure 5: The pixel shift for a standard imaging lens (a) experiencing 50G of shock is more than one pixel, whereas the pixel shift for a stability ruggedised lens (b) under the same conditions is less than 1μm, much smaller than the size of a pixel

Stability ruggedisation is an important manufacturing technique for imaging lenses used in applications where the field of view must be calibrated and maintained. These applications require imaging lenses to make accurate measurements, and include 3D stereo vision, machine vision sensing for robotics, and tracking the locations of moving objects. For these applications, the acceptable shift in optical pointing is often much smaller than a single pixel.
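A pointing shift measured in microns on the sensor can be expressed in pixels by dividing by the pixel pitch, as the sketch below shows. The pixel pitch and the sub-pixel budget are hypothetical values chosen for illustration, and the function names are assumptions.

```python
def pixel_shift(image_shift_um, pixel_pitch_um):
    """Pointing shift on the sensor expressed in pixels."""
    if pixel_pitch_um <= 0:
        raise ValueError("pixel pitch must be positive")
    return abs(image_shift_um) / pixel_pitch_um


def within_budget(image_shift_um, pixel_pitch_um, budget_pixels):
    """True if the shift stays inside the calibration budget."""
    return pixel_shift(image_shift_um, pixel_pitch_um) <= budget_pixels


pitch_um = 3.45        # hypothetical sensor pixel pitch
budget_pixels = 0.5    # hypothetical sub-pixel calibration budget

# A shift of 1 micron, the upper bound quoted for the ruggedised lens.
print(f"{pixel_shift(1.0, pitch_um):.2f} pixels",
      within_budget(1.0, pitch_um, budget_pixels))
# A hypothetical 5 micron shift, more than one pixel at this pitch.
print(f"{pixel_shift(5.0, pitch_um):.2f} pixels",
      within_budget(5.0, pitch_um, budget_pixels))
```

The smaller the pixels, the tighter the tolerance on element decentre becomes for the same budget in pixels.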